Since 2011, experimental social psychology has been in crisis mode. It has become evident that social psychologists violated some implicit or explicit norms about science. Readers of scientific journals expect that the methods and results sections of a scientific article provide an objective description of the study, data analysis, and results. However, it is now clear that this is rarely the case. Experimental social psychologists have used various questionable research practices to report mostly results that supported their theories. As a result, it is now unclear which published results are replicable and which are not.

In response to this crisis of confidence, a new generation of social psychologists has started to conduct replication studies. The most informative replication studies are published in a new type of article called registered replication reports (RRRs).

What makes RRRs so powerful is that they are not simple replication studies. An RRR is a collective effort to replicate an original study in multiple labs. This makes it possible to examine the generalizability of results across different populations and to combine the data in a meta-analysis. Pooling data across multiple replication studies reduces sampling error, so it becomes possible to obtain fairly precise effect size estimates that can be used to provide positive evidence for the absence of an effect. If the effect size estimate is within a reasonably small interval around zero, the results suggest that the population effect size is so close to zero that it is theoretically irrelevant. In this way, an RRR can have three possible results: (a) it replicates an original result in most of the individual studies (e.g., with 80% power, it would replicate the result in 80% of the replication attempts); (b) it fails to replicate the result in most of the replication attempts (e.g., it replicates the result in only 20% of replication studies), but the effect size in the meta-analysis is significant; or (c) it fails to replicate the original result in most studies and the meta-analytic effect size estimate suggests the effect does not exist.
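The link between power and the share of successful replications can be made concrete with a binomial calculation. This is a minimal sketch, not part of any RRR analysis: it assumes every lab has the same power and that labs are independent, and it uses 17 labs because that is the number in the facial feedback RRR discussed later in this post.

```python
from math import comb

def prob_k_significant(n_labs: int, power: float, k: int) -> float:
    """Binomial probability that exactly k of n_labs studies are significant."""
    return comb(n_labs, k) * power**k * (1 - power) ** (n_labs - k)

n_labs = 17  # number of labs in the facial feedback RRR
for power in (0.80, 0.20):
    expected = n_labs * power  # expected number of significant results
    p_none = prob_k_significant(n_labs, power, 0)  # chance of zero significant results
    print(f"power={power:.2f}: expected significant={expected:.1f}, P(none)={p_none:.3g}")
```

Under these assumptions, a complete absence of significant results across all labs is very unlikely unless power is low, which is what makes outcome (c) informative.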

Another feature of RRRs is that original authors get an opportunity to publish a response. This blog post is about Fritz Strack’s response to the RRR of Strack et al.’s facial feedback study. Strack et al. (1988) reported two studies that suggested incidental movement of facial muscles influences amusement ratings of cartoons. The article is the second most cited article by Strack and the most cited empirical article. It is therefore likely that Strack cared about the outcome of the replication study.

So, it may have elicited some negative feelings when the results showed that none of the replication studies produced a significant result and the meta-analysis suggested that the original result was a false positive; that is, the population effect size is close to zero and the results of the original studies were merely statistical flukes.

Strack’s first response to the RRR results was surprise because numerous researchers had conducted replication studies of this fairly famous study before, and the published studies typically, but not always, reported successful replications. Any naive reader of the literature, review articles, or textbooks is likely to have the same response. If an article has over 600 citations, it suggests that it made a solid contribution to the literature.

However, social psychology is not a normal psychological science. Even more famous effects like the ego-depletion effect or elderly priming have failed to replicate. A major replication attempt that was published in 2015 showed that only 25% of social psychological studies could be replicated (OSC, 2015), and Strack had commented on this result. Thus, I was a bit surprised by Strack’s surprise because the failure to replicate his results was in line with many other replication failures since 2011.

Despite concerns about the replicability of social psychology, Strack expected a positive result because he had conducted a meta-analysis of 20 studies that had been published in the past five years.

If 20 previous studies successfully replicated the effect and the 17 studies of the RRR all failed to replicate the effect, it suggests the presence of a moderator; that is, some variation between these two sets of studies that explains why the nonRRR studies found the effect and the RRR studies did not.

Moderator 1

> First, the authors have pointed out that the original study is “commonly discussed in introductory psychology courses and textbooks” (p. 918). Thus, a majority of psychology students was assumed to be familiar with the pen study and its findings.

As the study used deception, it makes sense that the study does not work if students know about the study. However, this hypothesis assumes that all 17 samples in the RRR were recruited from universities in which the facial feedback hypothesis was taught before they participated in the study. Moreover, it assumes that none of the samples in the successful nonRRR studies had the same problem.

However, Strack does not compare nonRRR studies to RRR studies. Instead, he focuses on three RRR samples that did not use student samples (Holmes, Lynott, and Wagenmakers). None of the three samples individually shows a significant result. Thus, none of these studies replicated the original findings. Strack conducts a meta-analysis of these three studies and finds an average standardized mean difference of d = .16.

Table 1

| Dataset | N | M-teeth | M-lips | SD-teeth | SD-lips | Cohen’s d |
|---|---|---|---|---|---|---|
| Holmes | 130 | 4.94 | 4.79 | 1.14 | 1.30 | 0.12 |
| Lynott | 99 | 4.91 | 4.71 | 1.49 | 1.31 | 0.14 |
| Wagenmakers | 126 | 4.54 | 4.18 | 1.42 | 1.73 | 0.23 |
| Pooled | 355 | 4.79 | 4.55 | 1.81 | 2.16 | 0.12 |

The standardized effect size of d = .16 is the unweighted average of the three d-values in Table 1. The weighted average is d = .17. However, if the three studies are first combined into a single dataset and the standardized mean difference is computed from the combined dataset, the standardized mean difference is only d = .12.
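The effect sizes in Table 1 can be recomputed from the reported means and standard deviations. The sketch below is a reconstruction under two assumptions that the post does not state: that d is the mean difference divided by the square root of the average of the two variances, and that the weighted average uses sample sizes as weights. Both choices reproduce the reported figures to two decimals.

```python
from math import sqrt

# (N, mean teeth, mean lips, SD teeth, SD lips) as reported in Table 1
table1 = {
    "Holmes": (130, 4.94, 4.79, 1.14, 1.30),
    "Lynott": (99, 4.91, 4.71, 1.49, 1.31),
    "Wagenmakers": (126, 4.54, 4.18, 1.42, 1.73),
}
pooled_row = (355, 4.79, 4.55, 1.81, 2.16)  # "Pooled" row of Table 1

def cohens_d(m1: float, m2: float, sd1: float, sd2: float) -> float:
    """Mean difference divided by the root of the average variance (assumed formula)."""
    return (m1 - m2) / sqrt((sd1**2 + sd2**2) / 2)

d_values = {lab: cohens_d(*row[1:]) for lab, row in table1.items()}

# Unweighted average of the three d-values
unweighted = sum(d_values.values()) / len(d_values)

# Average weighted by sample size (assumed weighting scheme)
total_n = sum(row[0] for row in table1.values())
weighted = sum(table1[lab][0] * d for lab, d in d_values.items()) / total_n

# d computed once from the combined dataset
pooled_d = cohens_d(*pooled_row[1:])

for lab, d in d_values.items():
    print(f"{lab}: d = {d:.2f}")
print(f"unweighted = {unweighted:.2f}, weighted = {weighted:.2f}, pooled = {pooled_d:.2f}")
```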

More importantly, the standard error for the pooled data is 2 / sqrt(355) = 0.106, which means the 95% confidence interval extends 0.106 * 1.96 = .21 standard deviations on each side of any of these point estimates. Even with d = .17, the 95% CI (-.04 to .38) includes 0. At the same time, the effect size in the original study was approximately d ~ .5, suggesting that the original results were extreme outliers or that additional moderating factors account for the discrepancy.
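The confidence interval arithmetic can be checked in a few lines. This sketch uses the same large-sample approximation as the text (standard error of d is roughly 2 / sqrt(N) for two groups of equal size); the function name is only illustrative.

```python
from math import sqrt

def approx_se_d(n_total: int) -> float:
    """Large-sample standard error of d for two equal-sized groups: 2 / sqrt(N)."""
    return 2 / sqrt(n_total)

se = approx_se_d(355)          # pooled N of the three non-student samples
half_width = 1.96 * se         # distance from the point estimate to each CI limit
d = 0.17                       # the most favorable of the three point estimates
ci = (d - half_width, d + half_width)

print(f"SE = {se:.3f}, half-width = {half_width:.2f}")
print(f"95% CI = ({ci[0]:.2f}, {ci[1]:.2f}), includes zero: {ci[0] < 0 < ci[1]}")
```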

Strack does something different. He tests the three proper studies against the average effect size of the “improper” replication studies with student samples. These studies have an average effect size of d = -0.03. This analysis shows a significant difference (proper d = .16 and improper d = -.03), t(15) = 2.35, p = .033.
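The reported p-value can be checked from the t-value and its degrees of freedom. The sketch below uses only the Python standard library and integrates the t density numerically with Simpson's rule; this is one of several ways to get the tail area and is not necessarily how the original number was computed.

```python
from math import gamma, pi, sqrt

def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    coef = gamma((df + 1) / 2) / (sqrt(df * pi) * gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)

def two_tailed_p(t: float, df: int, steps: int = 2000) -> float:
    """Two-tailed p-value via Simpson's rule on the t density from 0 to |t|.

    steps must be even for Simpson's rule.
    """
    upper = abs(t)
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_area = total * h / 3  # P(0 < T < |t|)
    return 1 - 2 * central_area

print(f"t(15) = 2.35 -> p = {two_tailed_p(2.35, 15):.3f}")
```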

This is an interesting pattern of results. The significant moderation effect suggests that facial feedback effects were stronger in the 3 studies identified by Strack than in the remaining 14 studies. At the same time, the average effect size of the three proper replication studies is still not significant, despite a pooled sample size that is three times larger than the sample size in the original study.

One problem for this moderator hypothesis is that the research protocol made it clear that the study had to be conducted before the original study was covered in a course (Simons’ response to Strack). However, maybe student samples fail to show the effect for another reason.

The best conclusion that can be drawn from these results is that the effect may be greater than zero, but that the effect size in the original studies was probably inflated.

Cartoons were not funny

Strack’s second concern is weaker and he is violating some basic social psychological rules about argument strength. Adding weak arguments increases persuasiveness if the audience is not very attentive, but they backfire with an attentive audience.

> Second, and despite the obtained ratings of funniness, it must be asked if Gary Larson’s The Far Side cartoons that were iconic for the zeitgeist of the 1980s instantiated similar psychological conditions 30 years later. It is indicative that one of the four exclusion criteria was participants’ failure to understand the cartoons.

One of the exclusion criteria was failure to understand the cartoons, but how many participants were excluded because of this criterion? Without this information, the point is not relevant. And if only participants who understood the cartoons were included in the analysis, it is not clear how this criterion could explain the replication failure. Moreover, Strack just tried to claim that proper studies did show the effect, which they could not show if the cartoons were not funny. Finally, the means clearly show that participants reported being amused by the cartoons.

Weak arguments like this undermine more reasonable arguments like the previous one.

Using A Camera

> Third, it should be noted that to record their way of holding the pen, the RRR labs deviated from the original study by directing a camera on the participants. Based on results from research on objective self-awareness, a camera induces a subjective self-focus that may interfere with internal experiences and suppress emotional responses.

This is a serious problem and in hindsight the authors of the RRR are probably regretting the decision to use cameras. A recent article actually manipulated the presence or absence of a camera and found stronger effects without a camera, although the predicted interaction effect was not significant. Nevertheless, the study suggested that creating self-awareness with a camera could be a moderator and might explain why the RRR studies failed to replicate the original effect.

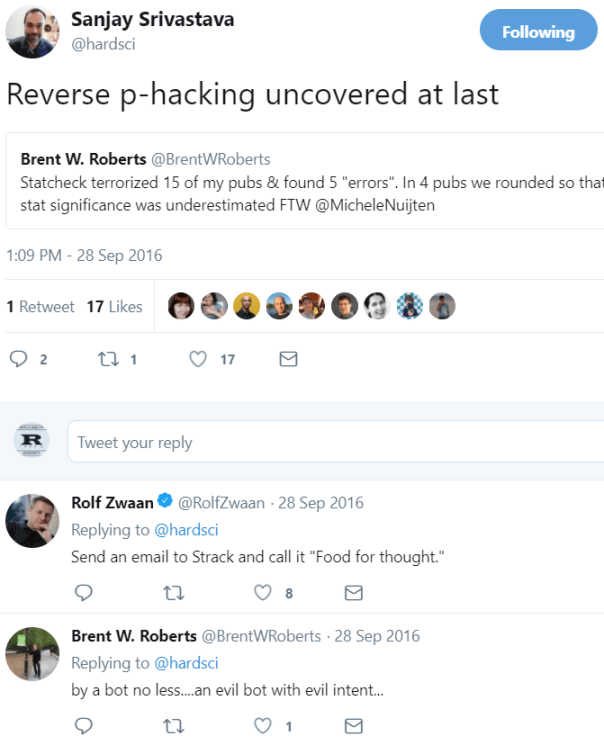

Reverse p-hacking

Strack also noticed a statistical anomaly in the data. When he correlated the standardized mean differences (d-values) with the sample sizes, a non-significant positive correlation emerged, r(17) = .45, p = .069. This correlation shows a statistical trend for larger samples to produce larger effect sizes. Even if this correlation were significant, it is not clear what conclusions should be drawn from this observation. Moreover, earlier Strack distinguished student samples and non-student samples as a potential moderator. It makes sense to include this moderator in the analysis because it could be confounded with sample size.
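A correlation can be converted into a t-value to see why r = .45 falls short of significance with so few studies. This sketch assumes that the 17 in r(17) is the number of labs, so the test has 17 - 2 = 15 degrees of freedom; the critical value is the standard two-tailed .05 table entry for df = 15.

```python
from math import sqrt

def r_to_t(r: float, n: int) -> tuple[float, int]:
    """Convert a Pearson correlation based on n observations to t with n - 2 df."""
    df = n - 2
    return r * sqrt(df / (1 - r * r)), df

t, df = r_to_t(0.45, 17)   # assumes 17 is the number of labs
critical_t = 2.131         # two-tailed .05 critical value for df = 15 (t table)

print(f"t({df}) = {t:.2f}, significant at .05: {abs(t) > critical_t}")
```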

A regression analysis shows that the effect of proper sample is no longer significant, t(14) = 1.75, and the effect of sampling error (1/sqrt(N)) is also not significant, t(14) = 0.59.

This new analysis suggests that sample size is not a predictor of effect sizes, which makes sense because there is no reasonable explanation for such a positive correlation.

However, now Strack makes a mistake by implying that the weaker effect sizes in smaller samples could be a sign of “reverse p-hacking.”

> Without insinuating the possibility of a reverse p hacking, the current anomaly needs to be further explored.

The rhetorical vehicle of “Without insinuating” can be used to backtrack from the insinuation that was never made, but Strack is well aware of priming research and the ironic effect of instructions “not to think about a white bear” (you probably didn’t think about one until now and nobody was thinking about reverse p-hacking until Strack mentioned it).

Everybody understood what he was implying (reverse p-hacking = intentional manipulations of the data to make significance disappear and discredit original researchers in order to become famous with failures to replicate famous studies) (Crystal Prison Zone blog; No Hesitations blog).

The main mistake that Strack makes is that a negative correlation between sample size and effect size can suggest that researchers were p-hacking (inflating effect sizes in small samples to get significance), but a positive correlation does not imply reverse p-hacking (making significant results disappear). Reverse p-hacking also implies a negative trend line where researchers like Wagenmakers with larger samples (the 2nd largest sample in the set), who love to find non-significant results that Bayesian statistics treat as evidence for the absence of an effect, would have to report smaller effect sizes to avoid significance or Bayes factors in favor of an effect.

So, here is Strack’s fundamental error. He correctly assumes that p-hacking results in a negative correlation, but he falsely assumes that reverse p-hacking would produce a positive correlation and then treats a positive correlation as evidence for reverse p-hacking. This is absurd and the only way to backtrack from this faulty argument is to use the “without insinuating” hedge (“I never said that this correlation implies reverse p-hacking, in fact I made clear that I am not insinuating any of this.”)

Questionable Research Practices as Moderator

Although Strack mentions two plausible moderators (student samples, camera), there are other possible moderators that could explain the discrepancies between his original results and the RRR results. One plausible moderator that Strack does not mention is that the original results were obtained with questionable research practices.

Questionable research practices is a broad term for a variety of practices that undermine the credibility of published results (John et al., 2012), including fraud.

To be clear, I am not insinuating that Strack fabricated data and I have said so in public before. Let me be absolutely clear because the phrasing I used in the previous sentence is a stab at Strack’s reverse p-hacking quote, which may be understood as implying exactly what is not being insinuated. I positively do not believe that Fritz Strack faked data for the 1988 article or any other article.

One reason for my belief is that I don’t think anybody would fake data that produce only marginally significant results that some editors might reject as insufficient evidence. If you fake your data, why fake p = .06, if you can fake p = .04?

If you take a look at the Figure above, you see that the 95%CI of the original study includes zero. That shows that the original study did not show a statistically significant result. However, Strack et al. (1988) used a more liberal criterion of .10 (two-tailed) or .05 (one-tailed) to test significance and with this criterion the results were significant.

The problem is that it is unlikely for two independent studies to produce two marginally significant results in a row. This is either an unlikely fluke or some other questionable research practices were used to get these results. So, yes, I am insinuating that questionable research practices may have inflated the effect sizes in Strack et al.’s studies and that this explains at least partially why the replication study failed.
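A rough illustration of why two marginally significant results in a row are unlikely: the band between the one-tailed and two-tailed .05 criteria is narrow, so even a study whose expected z-score sits in the middle of that band lands in it only occasionally. The sketch below is a simplification and not the analysis behind the claim above. It assumes a normal approximation for the test statistic, results in the predicted direction only, and two independent studies with the same expected z-score.

```python
from statistics import NormalDist

Z = NormalDist()
lo = Z.inv_cdf(0.95)    # about 1.645, p = .05 one-tailed
hi = Z.inv_cdf(0.975)   # about 1.960, p = .05 two-tailed

def p_marginal(ncp: float) -> float:
    """P(lo < z < hi) when the observed z-score is Normal(ncp, 1)."""
    return Z.cdf(hi - ncp) - Z.cdf(lo - ncp)

# Search a grid of expected z-scores for the most favorable case
p_once, best_ncp = max((p_marginal(i / 100), i / 100) for i in range(0, 401))
p_twice = p_once**2     # two independent studies, same expected z

print(f"best case: expected z = {best_ncp:.2f}, P(marginal) = {p_once:.3f}")
print(f"P(two marginal results in a row) = {p_twice:.3f}")
```

Under these assumptions, even the most favorable expected z-score gives a probability below 2% for two marginal results in a row, and any other true effect size makes the pattern less likely still.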

It is important to point out that in 1988, questionable research practices were not considered questionable. In fact, experimental social psychologists were trained and trained their students to use these practices to get significance (Stangor, 2012). Questionable research practices also explain why the reproducibility project could only replicate 25% of published results in social and personality psychology (OSC, 2015). Thus, it is plausible that QRPs also contributed to the discrepancy between Strack et al.’s original studies and the RRR results.

The OSC reproducibility project estimated that QRPs inflate effect sizes by 100%. Thus, an inflated effect size of d = .5 in Strack et al. (1988) might correspond to a true effect size of d = .25 (.25 real + .25 inflation = .50 observed). Moreover, the inflation increases as p-values get closer to .05. Thus, for a marginally significant result, inflation is likely to be greater than 100% and the true effect size is likely to be even smaller than .25. This suggests that the true effect size could be around d = .2, which is close to the effect size estimate of the “proper” RRR studies identified by Strack.

A meta-analysis of facial feedback studies also produced an average effect size estimate of d = .2, but this estimate is not corrected for publication bias, while the meta-analysis also showed evidence of publication bias. Thus, the true average effect size is likely to be lower than .2 standard deviations. Given the heterogeneity of studies in this meta-analysis it is difficult to know which specific paradigms are responsible for this average effect size and could be successfully replicated (Coles & Larsen, 2017). The reason is that existing studies are severely underpowered to reliably detect effects of this magnitude (Replicability Report of Facial Feedback studies).

The existing evidence suggests that effect sizes of facial feedback studies are somewhere between 0 and .5, but it is impossible to say whether the true effect is just slightly above zero with no practical significance or whether it is of sufficient magnitude so that a proper set of studies can reliably replicate the effect. In short, 30 years and over 600 citations later, it is still unclear whether facial feedback effects exist and under which conditions this effect can be observed in the laboratory.

Did Fritz Strack use Questionable Research Practices?

It is difficult to demonstrate conclusively that a researcher used QRPs based on a couple of statistical results, but it is possible to examine this question with larger sets of data. Brunner & Schimmack (2018) developed a method, z-curve, that can reveal the use of QRPs for large sets of statistical tests. To obtain a large set of studies, I used automatic text extraction of test statistics from articles co-authored by Fritz Strack. I then applied z-curve to the data. I limited the analysis to studies before 2010 when social psychologists did not consider QRPs problematic, but rather a form of creativity and exploration. Of course, I view QRPs differently, but the question whether QRPs are wrong or not is independent of the question whether QRPs were used.

This figure (see detailed explanation here) shows the strength of evidence (based on test statistics like t and F-values converted into z-scores) in Strack’s articles. The histogram shows a mode at 2, which is just significant (z = 1.96 ~ p = .05, two-tailed). The high bar of z-scores between 1.8 and 2 shows marginally significant results as does the next bar with z-scores between 1.6 and 1.8 (1.65 = .05 one-tailed). The drop from 2 to 1.6 is too steep to be compatible with sampling error.
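The correspondence between z-scores and p-values used to read the histogram can be reproduced with the standard normal distribution. This is a minimal sketch of the conversion only, with illustrative function names; it is not the z-curve method itself.

```python
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution

def p_two_tailed_from_z(z: float) -> float:
    """Two-tailed p-value for an absolute z-score."""
    return 2 * (1 - Z.cdf(abs(z)))

def p_one_tailed_from_z(z: float) -> float:
    """One-tailed p-value for a z-score in the predicted direction."""
    return 1 - Z.cdf(z)

def z_from_two_tailed_p(p: float) -> float:
    """Absolute z-score that corresponds to a two-tailed p-value."""
    return Z.inv_cdf(1 - p / 2)

print(f"z = 1.96 -> p (two-tailed) = {p_two_tailed_from_z(1.96):.3f}")
print(f"z = 1.65 -> p (one-tailed) = {p_one_tailed_from_z(1.65):.3f}")
print(f"p = .05 (two-tailed) -> z = {z_from_two_tailed_p(0.05):.2f}")
```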

The grey line provides a vague estimate of the expected proportion of non-significant results. The so-called file drawer (non-significant results that are not reported) is very large and it is unlikely that so many studies were attempted and failed. For example, it is unlikely that Strack conducted 10 more studies with N = 100 participants and did not report the results of these studies because they produced non-significant results. Thus, it is likely that other QRPs were used that help to produce significance. It is impossible to say how significant results were produced, but the distribution of z-scores strongly suggests that QRPs were used.

The z-curve method provides an estimate of the average power of significant results. The estimate is 63%. This value means that a randomly drawn significant result from one of Strack’s articles has a 63% probability of producing a significant result again in an exact replication study with the same sample size.

This value can be compared with estimates for social psychology using the same method. For example, the average for the Journal of Experimental Social Psychology from 2010-2015 is 59%. Thus, the estimate for Strack is consistent with general research practices in his field.

One caveat with the z-curve estimate of 63% is that the dataset includes theoretically important and less important tests. Often the theoretically important tests have lower z-scores and the average power estimates for so-called focal tests are about 20-30 percentage points lower than the estimate for all tests.

A second caveat is that there is heterogeneity in power across studies. Studies with high power are more likely to produce really small p-values and larger z-scores. This is reflected in the estimates below the x-axis for different segments of studies. The average for studies with just significant results (z = 2 to 2.5) is only 45%. The estimate for marginally significant results is even lower.

As 50% power corresponds to the criterion for significance, it is possible to use z-curve as a way to adjust p-values for the inflation that is introduced by QRPs. Accordingly, only z-scores greater than 2.5 (~ p = .01) would be significant at the 5% level after correcting for QRPs. However, once QRPs are being used, bias-corrected values are just estimates and validation with actual replication studies is needed. Using this correction, Strack’s original results would not even meet the weak standard for marginal significance.
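The statement that 50% power corresponds to the significance criterion follows from the normal model: a study whose expected z-score equals 1.96 produces an observed z above 1.96 half of the time. The sketch below illustrates only this relationship between expected z-scores and power. It is not the z-curve estimation procedure, and the function names are illustrative.

```python
from statistics import NormalDist

Z = NormalDist()
CRIT = Z.inv_cdf(0.975)  # about 1.96, two-tailed .05 criterion

def power(expected_z: float) -> float:
    """Probability of a significant two-tailed result given the expected z-score."""
    upper_tail = 1 - Z.cdf(CRIT - expected_z)
    lower_tail = Z.cdf(-CRIT - expected_z)  # sign errors, negligible for positive effects
    return upper_tail + lower_tail

def expected_z_for_power(target: float) -> float:
    """Expected z-score that yields the target power (ignores the lower tail)."""
    return CRIT + Z.inv_cdf(target)

print(f"expected z = {CRIT:.2f} -> power = {power(CRIT):.2f}")
z63 = expected_z_for_power(0.63)
print(f"power = .63 -> expected z = {z63:.2f}")
```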

Final Words

In conclusion, 30 years of research have failed to provide conclusive evidence for or against the facial feedback hypothesis. The lack of progress is largely due to a flawed use of the scientific method. As journals published only successful outcomes, empirical studies failed to provide empirical evidence for or against a hypothesis derived from a theory. Social psychologists only recognized this problem in 2011, when Bem was able to provide evidence for an incredible phenomenon of erotic time travel.

There is no simple answer to Fritz Strack’s question “Have I done something wrong?” because there is no objective standard to answer this question.

Did Fritz Strack fail to produce empirical evidence for the facial feedback hypothesis because he used the scientific method wrong? I believe the answer is yes.

Did Fritz Strack do something morally wrong in doing so? I think the answer is no. He was trained and trained students in the use of a faulty method.

A more difficult question is whether Fritz Strack did something wrong in his response to the replication crisis and the results of the RRR.

We can all make honest mistakes and it is possible that I made some honest mistakes when I wrote this blog post. Science is hard and it is unavoidable to make some mistakes. As the German saying goes, “Wo gehobelt wird, fallen Späne,” which is equivalent to saying “you can’t make an omelette without breaking some eggs.”

Germans also have a saying that translates to “those who work a lot make a lot of mistakes, those who do nothing make no mistakes.” Clearly Fritz Strack did a lot for social psychology, so it is only natural that he also made mistakes. The question is how scientists respond to criticism and discovery of mistakes by other scientists.

The very famous social psychologist Susan Fiske (2015) encouraged her colleagues to welcome humiliation. This seems a bit much, especially for Germans who love perfection and hate making mistakes. However, the danger of a perfectionistic ideal is that criticism can be interpreted as a personal attack with unhealthy consequences. Nobody is perfect and the best way to deal with mistakes is to admit them.

Unfortunately, many eminent social psychologists seem to be unable to admit that they used QRPs and that replication failures of some of their famous findings are to be expected. It doesn’t require rocket science to realize that p-hacked results do not replicate without p-hacking. So, why is it so hard to admit the truth that everybody knows anyways?

It seems to be human nature to cover up mistakes. Maybe this is an embodied reaction to shame like trying to cover up when a stranger sees us naked. However, typically this natural response is worse and it is better to override it to avoid even more severe consequences. A good example is Donald Trump. Surely, having sex with a pornstar is a questionable behavior for a married man, but this is no longer the problem for Donald Trump. His presidency may end early not because he had sex with Stormy Daniels, but because he lied about it. As the saying goes, the cover-up is often worse than the crime. Maybe there is even a social psychological experiment to prove it, p = .04.

P.S. There is also a difference between not doing something wrong and doing something right. Fritz, you can still do the right thing and retract your questionable statement about reverse p-hacking and help new generations to avoid some of the mistakes of the past.

References

Strack, F. (2016). Reflection on the smiling registered replication report. Perspectives on Psychological Science, 11(6), 929–930. https://doi.org/10.1177/1745691616674460